Deploying GPT-J Models on a Telegram Bot with Hugging Face Hub - For Free


💥 Motivation

tip

By the end of this post, you will learn how to:

- Set up a Telegram bot with a Python wrapper library.
- Use the Gradio API to access the GPT-J model prediction.
- Host the Telegram bot on Hugging Face Spaces.

By the end of this post, you’ll have your own Telegram bot that has access to the GPT-J-6B model. All for free.

Deploying a state-of-the-art (SOTA) GPT-like language model on a chatbot can be tricky.

You might wonder: How do you access the GPT model? Which infrastructure should host the bot and the model? Should it be serverless? AWS? Kubernetes? 🤒

Yada.. yada.. yada..

I get it. Things get complicated quickly. It’s not worth going down the rabbit hole, especially if you’re only experimenting or prototyping a feature.

In this post, I will show you how I deploy a SOTA GPT-J model by EleutherAI on a Telegram bot.

For FREE 🚀.

By the end of this post, you’ll have your very own Telegram bot that can query the GPT-J model with any text you send it 👇

If that looks interesting, let’s begin 👩‍💻

🤖 Token From the Mighty BotFather

The BotFather, who holds the key to the world of bots 🤖

First, you must have a Telegram account. Create one here. It’s free.

Next, set up a bot that is associated with your Telegram account.

For that, let’s consult the mighty BotFather and initiate the bot creation.

This link brings you to the BotFather.

Alternatively, type BotFather in the Telegram search bar.

The first result leads you to the BotFather.

Next, send /start to the BotFather to start a conversation.

Follow the instructions given by the BotFather until you obtain a token for your bot.

warning

Keep this token private. Anyone with this token has access to your bot.

This video provides a good step-by-step visual guide on how to obtain a token from the BotFather.
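Before writing any bot code, you can confirm the token works by calling the Bot API's getMe method, which returns basic information about the bot. Below is a minimal sketch using only Python's standard library. It assumes the token is stored in an environment variable named telegram_token (the same name used later in this post), and the helper names are made up for this example.

```python
import json
import os
import urllib.request

API_BASE = "https://api.telegram.org"


def get_me_url(token: str) -> str:
    # Bot API methods live under https://api.telegram.org/bot<token>/<method>
    return f"{API_BASE}/bot{token}/getMe"


def parse_get_me(payload: dict) -> str:
    # A successful getMe reply looks like {"ok": true, "result": {...bot user...}}
    if not payload.get("ok"):
        raise ValueError(f"Telegram rejected the token: {payload.get('description')}")
    return payload["result"]["username"]


def check_token(token: str) -> str:
    # Makes a real HTTPS request; an invalid token raises urllib.error.HTTPError (401)
    with urllib.request.urlopen(get_me_url(token), timeout=10) as resp:
        return parse_get_me(json.load(resp))


if __name__ == "__main__":
    token = os.environ.get("telegram_token")  # assumed variable name
    if token:
        print("Token works for @" + check_token(token))
    else:
        print("Set the telegram_token environment variable to check your token")
```

The script never prints the token itself, in line with keeping it private.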

🐍 Python Telegram Bot

Telegram wasn’t written with Python.

But we ❤️ Python!

Can we still use Python to code our bot?

✅ Yes! With a wrapper library like python-telegram-bot.

python-telegram-bot provides a pure Python interface for the Telegram Bot API.

It’s incredibly user-friendly too.

You can start running your Telegram bot with only 8 lines of code 👇


The above code snippet creates a Telegram bot that recognizes the /start command (specified on line 8).

Upon receiving the /start command, it calls the hello function on line 4, which replies to the user.

Here’s what it looks like when you run the code 👇

Yes! It’s that simple! 🤓

From here, all you have to do is register other commands that call any functions of your choice.
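For example, here is a sketch that registers a second, hypothetical /help command next to /start, written against the python-telegram-bot 13.x API used in this post. The reply texts are placeholders, the token is read from a telegram_token environment variable (an assumption at this point in the post), and the telegram import sits inside main() so the handler functions can be tried out without a live bot.

```python
import os


def hello(update, context):
    # Handler for /start: reply to the message that triggered it
    update.message.reply_text(f"Hello {update.effective_user.first_name}!")


def help_command(update, context):
    # Handler for a hypothetical /help command (placeholder text)
    update.message.reply_text("Send /start to say hi.")


def main():
    token = os.environ.get("telegram_token")  # assumed variable name
    if not token:
        print("Set the telegram_token environment variable before starting the bot")
        return
    # python-telegram-bot 13.x API; v20 replaced Updater.dispatcher with telegram.ext.Application
    from telegram.ext import CommandHandler, Updater

    updater = Updater(token)
    dispatcher = updater.dispatcher
    # Each CommandHandler maps one /command to one Python function
    dispatcher.add_handler(CommandHandler("start", hello))
    dispatcher.add_handler(CommandHandler("help", help_command))
    updater.start_polling()  # fetch new updates from Telegram in a background thread
    updater.idle()  # block until the process is interrupted (e.g. Ctrl+C)


if __name__ == "__main__":
    main()
```

Each extra command is one more CommandHandler line, and every handler shares the same (update, context) signature.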

Before running any of this, install python-telegram-bot:

```bash
pip install python-telegram-bot==13.11
```

warning

python-telegram-bot is under active development, and version 20 introduced breaking changes. For this post, stick with a version below 20 (the code here uses 13.11).
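To check which version you have installed, a small script like the following can help. It's a sketch built on the standard library's importlib.metadata (Python 3.8+), and the function names are hypothetical.

```python
from importlib.metadata import PackageNotFoundError, version


def is_pre_v20(version_string: str) -> bool:
    # Compare only the major version number, e.g. "13.11" -> 13
    major = int(version_string.split(".")[0])
    return major < 20


def check_ptb_version() -> str:
    try:
        installed = version("python-telegram-bot")
    except PackageNotFoundError:
        return "python-telegram-bot is not installed"
    if is_pre_v20(installed):
        return f"OK: python-telegram-bot {installed}"
    return f"python-telegram-bot {installed} is v20 or newer; this post's code needs <20"


if __name__ == "__main__":
    print(check_ptb_version())
```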

To run the bot, save the 8-line code snippet above into a .py file and run it on your computer.

Remember to replace 'YOUR-TOKEN' on line 7 with your own token from the BotFather.

I will save the code as bot.py on my machine and run the script with:

```bash
python bot.py
```

Voila!

Your bot is now live and ready to chat.

Search for your bot on the Telegram search bar, and send it the /start command.

It should reply with a text message, just like in the screen recording above.

💡 GPT-J and the Gradio API

We’ve configured our Telegram bot. What about the GPT-J model? Unless you have a powerful computer that runs 24/7, I wouldn’t recommend running the GPT-J model on your machine (although you can).
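To put a rough number on "powerful": the weights alone need about (parameter count) × (bytes per parameter) of memory. The sketch below assumes the nominal 6 billion parameters and ignores activations and framework overhead, so real usage is higher.

```python
def weight_memory_gb(n_params: float, bytes_per_param: int) -> float:
    # Weights only, in decimal gigabytes (1 GB = 1e9 bytes)
    return n_params * bytes_per_param / 1e9


N_PARAMS = 6e9  # nominal "6B" parameter count (assumption; the exact count is slightly higher)

for dtype, nbytes in [("float32", 4), ("float16", 2)]:
    print(f"{dtype}: ~{weight_memory_gb(N_PARAMS, nbytes):.0f} GB")
```

This prints about 24 GB for float32 and 12 GB for float16, before any runtime overhead.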

I recently found a better solution that you can use to host the GPT-J model. Anyone can use it, it runs 24/7, and best of all it’s free!

Enter 👉 Hugging Face Hub.

Hugging Face Hub is a central place where anyone can share models, datasets, and app demos. The three main repository types on the Hub are:

- Models - hosts models.

- Datasets - stores datasets.

- Spaces - hosts demo apps.

The GPT-J-6B model is generously provided by EleutherAI on the Hugging Face Hub as a model repository. It’s publicly available for use. Check it out here.

You can interact with the model directly on the GPT-J-6B model repo, or create a demo on your own Space. In this post, I will show you how to set up a Gradio app as a demo on a Hugging Face Space to interact with the GPT-J-6B model.

First, create a Space with your Hugging Face account.

If you’re unsure how to do that, I wrote a guide here.

Next, create an app.py file in your Space repo.

Here’s the content of app.py 👇


On line 22, we load the GPT-J-6B model from EleutherAI’s model repository on the Hub and serve its predictions on the Space with a Gradio app.

Check out my Gradio demo app on my Space, or try it out below 👇

Besides providing a user interface, hosting a Gradio app on a Space also exposes an API endpoint, so you can access the app from elsewhere. For example, I’ve used this feature to get model predictions in my Android app here.

To view the API, click the “view the api” button at the bottom of the Space. It takes you to an API page that shows how to use the endpoint.

All we need to do now is send a POST request from our Telegram bot to access the GPT-J model prediction.

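```python
import requests


def get_gpt_response(text):
    # Send the user's text to the Gradio API of the GPT-J-6B Space
    r = requests.post(
        url="https://hf.space/embed/dnth/gpt-j-6B/+/api/predict/",
        json={"data": [text]},
        timeout=60,  # generation can be slow, but don't hang forever
    )
    r.raise_for_status()  # surface HTTP errors instead of failing inside r.json()
    response = r.json()
    # The generated text is the first item in the "data" list
    return response["data"][0]
```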

Let’s add this function into the bot.py file we created earlier.

Here’s mine


I’m gonna save this as app.py on my computer and run it with:

```bash
python app.py
```

Now, your bot will respond to the /start command by calling the hello function (configured on line 32).

Additionally, it will respond to all non-command texts by calling the respond_to_user function (configured on line 33).

That is how we get GPT-J’s response through the Telegram bot 🤖. If you’ve made it to this point, congratulations! We’re almost done!

tip

If you only want to run the Telegram bot on your own machine, you can stop here. Bear in mind that your machine needs to stay on 24/7 for your bot to work.

But, if you wish to take your bot to the next level 🚀 then read on 👇

🤗 Hosting Your Telegram Bot

A little-known feature that I discovered recently is that you can host your Telegram bot on Hugging Face Spaces 🤫.

If you create a new Space and upload the app.py script along with a requirements.txt file, it works out of the box!

The contents of requirements.txt are:

```text
python-telegram-bot==13.11
requests==2.27.1
```

If all is well, the Space will start building, and your bot will be up and running. You no longer have to keep your computer on 24/7 to run the bot.

I’m not sure if this is a feature or a bug, but it’s pretty neat, eh? Free hosting for your bots! Now let’s create Skynet 🤖

warning

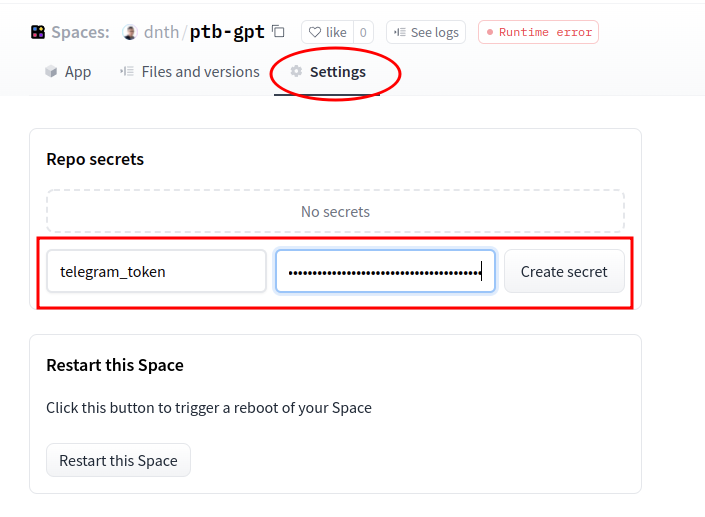

Jokes aside, make sure you don’t expose your Telegram token by putting it in the source code. To keep it hidden, store it in an environment variable instead.

On your Space, click on the Settings tab and enter the Name and Value of the environment variable.

Let’s set the name to telegram_token and the value to your Telegram token.

In your app.py, change line 31 to the following (and add import os at the top of the file if it isn’t already there):

```python
updater = Updater(os.environ['telegram_token'])
```

Now you can freely share your code without exposing your Telegram token!
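If the variable is missing or misspelled, os.environ['telegram_token'] fails with a bare KeyError. A hypothetical read_token helper like this one, a sketch that isn't part of the original app.py, fails with a clearer message instead:

```python
import os


def read_token(name: str = "telegram_token") -> str:
    # Read the bot token from the environment instead of hard-coding it
    token = os.environ.get(name, "").strip()
    if not token:
        raise RuntimeError(
            f"Environment variable {name!r} is not set; add it in your Space's Settings tab"
        )
    return token
```

With it, the line becomes updater = Updater(read_token()).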

For completeness, you can view my final app.py here.

🎉 Conclusion

In this post, I’ve shown you how easily you can take a SOTA model such as GPT-J-6B and deploy it live on a Telegram bot.

tip

We’ve walked through how to:

- Set up a Telegram bot with a Python wrapper library.
- Use the Gradio API to access the GPT-J model prediction.
- Host the Telegram bot on Hugging Face Spaces.

Link to my Telegram bot here - Try it out.

The end result 👉 a 24/7 working Telegram bot that has access to the GPT-J-6B model 🥳

For FREE 🚀

That’s about a wrap! Congratulations on making it! So, what’s next?

tip

Here are some of my suggestions to level up your bot:

I’d love to see what you create. Tag me in your Twitter/LinkedIn post! 😍

🙏 Comments & Feedback

I hope you’ve learned a thing or two from this blog post. If you have any questions, comments, or feedback, please leave them on the following Twitter post or drop me a message.

Deploying GPT-like language models on a chatbot is tricky.

You might wonder

• How to access the model?

• Where to host the bot?

In this 🧵 I walk you through how easily I deployed a GPT-J-6B model by #EleutherAI on a #Telegram bot with @huggingface and @Gradio.

For FREE 🚀 pic.twitter.com/z0uvnxksWt

— Dickson Neoh 🚀 (@dicksonneoh7) May 20, 2022