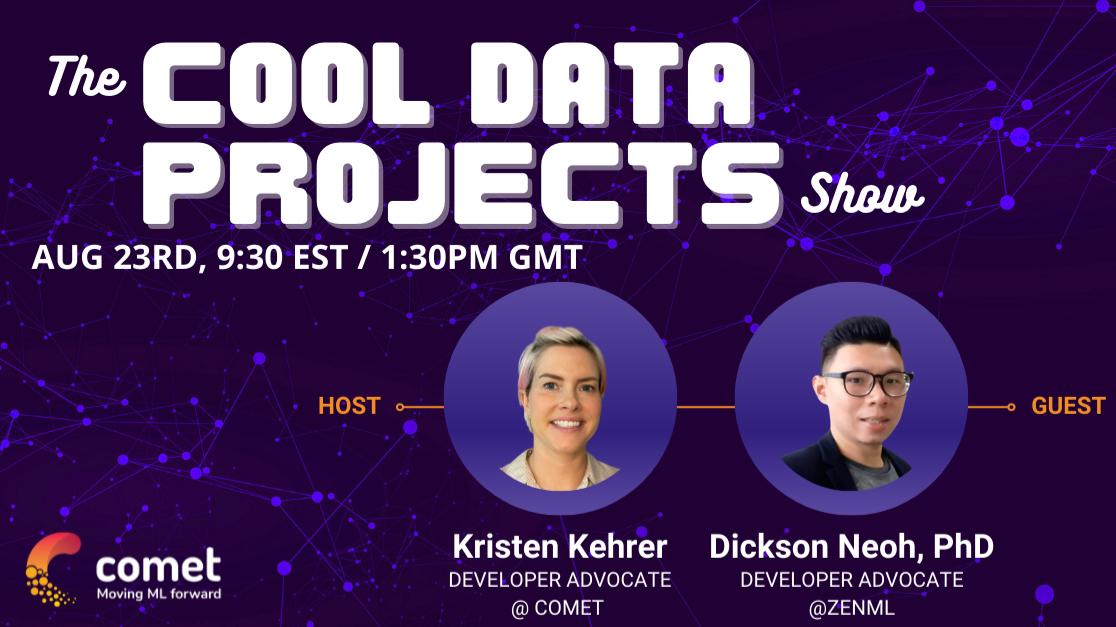

Cool Data Projects Show with Kristen - Gun Detection on CPU

Table of Contents

🔥 Introduction

Rapid advances in computer vision have produced sophisticated models that perform complex tasks, such as object detection and segmentation, with high accuracy. However, these models often demand substantial computational resources and can be slow when running on a CPU. This poses a challenge for real-time applications, such as surveillance and security, where quick detection and analysis of objects are critical.

In this talk, we demonstrate real-time pistol detection on a CPU. We also outline the methodology and lessons learned from the project, and offer some advice for those looking to implement similar computer vision models on a CPU.

🛗 Methodology

The project optimized both the computer vision model and the hardware configuration to achieve fast inference on a CPU. The key steps were:

Model selection: We selected a state-of-the-art object detection model that was well-suited for real-time applications and had good accuracy.

Model optimization: We optimized the model architecture and parameters to reduce the computational requirements and improve the inference speed.

Hardware optimization: We configured the hardware to ensure that the CPU was being utilized efficiently and that the memory and storage requirements were optimized.

Testing and validation: We tested and validated the system using real-world data to ensure that it was working as expected and that the accuracy and inference speed met the desired requirements.
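The inference-speed check in the last step can be sketched as a small benchmarking harness. This is a minimal illustration, not the project's actual code: `fake_detector` is a hypothetical stand-in for the real model, and the 33.3 ms budget (roughly 30 frames per second) is an assumed real-time target rather than a figure from the project.

```python
import statistics
import time


def fake_detector(frame):
    """Hypothetical stand-in for a real detector; just burns a little CPU time."""
    return sum(frame) % 2 == 0


def benchmark(infer, frames, warmup=5):
    """Return per-frame latency statistics (in milliseconds) for `infer`."""
    # Warm-up calls are not timed, so one-time setup costs do not skew results.
    for frame in frames[:warmup]:
        infer(frame)

    latencies_ms = []
    for frame in frames:
        start = time.perf_counter()
        infer(frame)
        latencies_ms.append((time.perf_counter() - start) * 1000.0)

    latencies_ms.sort()
    p95_index = min(len(latencies_ms) - 1, int(0.95 * len(latencies_ms)))
    mean_ms = statistics.mean(latencies_ms)
    return {
        "mean_ms": mean_ms,
        "p95_ms": latencies_ms[p95_index],
        "fps": 1000.0 / mean_ms if mean_ms > 0 else float("inf"),
    }


def meets_budget(stats, budget_ms):
    """True when the 95th-percentile latency fits the per-frame budget."""
    return stats["p95_ms"] <= budget_ms


if __name__ == "__main__":
    # Synthetic "frames"; a real run would use frames from recorded footage.
    frames = [list(range(1000)) for _ in range(50)]
    stats = benchmark(fake_detector, frames)
    print(stats, meets_budget(stats, budget_ms=33.3))  # assumed 30 FPS target
```

Checking the 95th percentile rather than the mean is a deliberate choice here: a real-time system is usually judged by its slow frames, not its average ones.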

✅ Lessons Learned

Here are some of the lessons we learned:

Choose the right model: Select a state-of-the-art object detection model that is well-suited for real-time applications and has good accuracy.

Optimize the model: Optimize the model architecture and parameters to reduce the computational requirements and improve the inference speed.

Configure the hardware properly: Configure the hardware to ensure that the CPU is being utilized efficiently and that the memory and storage requirements are optimized.

Test and validate using real-world data: Test and validate the system using real-world data to ensure that it is working as expected and that the accuracy and inference speed meet the desired requirements.
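For the accuracy side of validation, one common approach is to match predicted boxes against labeled boxes by intersection over union (IoU) and report precision and recall. The sketch below assumes boxes in `(x1, y1, x2, y2)` format and an IoU threshold of 0.5; both are illustrative choices, and the greedy matching ignores confidence scores for simplicity. It is not the evaluation code used in the project.

```python
def iou(box_a, box_b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def precision_recall(predictions, ground_truth, iou_threshold=0.5):
    """Greedily match each prediction to at most one labeled box."""
    unmatched = list(ground_truth)
    true_positives = 0
    for pred in predictions:
        # Pick the still-unmatched labeled box that overlaps this prediction most.
        best = max(unmatched, key=lambda gt: iou(pred, gt), default=None)
        if best is not None and iou(pred, best) >= iou_threshold:
            true_positives += 1
            unmatched.remove(best)
    precision = true_positives / len(predictions) if predictions else 0.0
    recall = true_positives / len(ground_truth) if ground_truth else 0.0
    return precision, recall


if __name__ == "__main__":
    # Made-up boxes for illustration only: one correct detection, one false alarm,
    # and one labeled pistol that was missed.
    preds = [(0, 0, 10, 10), (50, 50, 60, 60)]
    labels = [(0, 0, 10, 10), (100, 100, 110, 110)]
    print(precision_recall(preds, labels))
```

In a security setting, missed detections and false alarms carry different costs, so reporting precision and recall separately is usually more informative than a single accuracy number.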

💫 Conclusion

Fast inference with computer vision models on a CPU is challenging but achievable. By following the steps outlined in this post (optimizing the model and hardware configuration, then testing and validating the system on real-world data), you can achieve high accuracy and low latency in real-time applications. This can have significant practical implications for industries such as surveillance and security, where quick detection and analysis of objects are critical.